Welcome to My Development Portfolio

I have extensive experience working with Python and many other libraries. See below for details on my coding experience and examples of my work. You can also click the link below to view my GitHub portfolio.

Languages & Experience

Python

Libraries/APIs: ArcPy, arcpy.mapping, pandas (extensive experience), GeoPandas, Seaborn, scikit-learn, Matplotlib, GDAL, Google Earth Engine, geemap, leafmap, CARTOframes, Plotly, Folium, snscrape, Twython, Twilio, Overpass API (OSMnx), ADS-B Exchange, Census API queries, Terrascope SDK (proprietary), GoUtils (proprietary), PySpark

IDEs: Jupyter Notebook, PyCharm, IDLE, VS

Frameworks: Flask

Level of Comprehension: 8/10

R

Packages: Leaflet for R

IDEs: RStudio

Level of Comprehension: 2/10

JavaScript

Libraries/APIs: Leaflet.js, Mapbox GL JS, ESRI JavaScript API

Frameworks: Bootstrap, jQuery

IDEs: Brackets, Visual Studio, PyCharm

Level of Comprehension: 4/10

SQL

Applications: MySQL, ArcGIS SQL Editor, PostGIS, PostgreSQL, SQLite (paired with Flask)

Knowledge of: single-table queries, multiple-table queries

Level of Comprehension: 6/10

HTML/CSS

Knowledge: HTML 5, Bootstrap 5

IDEs: Visual Studio, Brackets, Flask (Python)

Level of Comprehension: 6/10

PHP

Some experience. I have little experience writing PHP code, but I have a decent understanding of how it works, and I am learning more about server-side scripting as I develop more web applications.

Amazon Elastic MapReduce (EMR) Notebooks (Python + PySpark)

As a Solutions Engineer at Orbital Insight, I maintained our off-platform workflows for major clients in support of our professional services portfolio. I ran many pipelines via Amazon EMR and created and updated many notebooks written with the PySpark library. The notebooks relied on Spark because of the enormous size of the datasets we were pulling, packaging, and writing functions against.

Database Management/Server-Side Technologies & Web Development Frameworks

PostgreSQL and PostGIS (Local Instance + Amazon RDS Instance)

Used for personal development of a Strava-like web GIS application that I am currently building. I have also loaded data into these systems multiple times through ArcGIS, AWS, and QGIS.

ArcSDE

Currently run on ArcGIS Enterprise at work. While I have little experience with the back end, I do have experience managing the enterprise database and fixing common errors associated with portals and licensing.

GeoServer

Used at work on an AWS instance, where it serves as the data store for most of the software products our company offers.

Flask

Currently deploying a web application built with Python, Flask, and MySQL to AWS.

Live Applications

CoronaVirus Hub

In my personal time, I took advantage of the vast amount of geospatial data being published by organizations such as ESRI and Johns Hopkins. I created my own web map using a live GeoJSON layer from ESRI (published daily), HTML, CSS, and the Mapbox GL JS library. The website shows both the number of confirmed cases per region (clusters represent regions) and the number of confirmed coronavirus-related deaths. I am pleased to say that the data on the map updates live. The table under the legend is most likely inaccurate. See the application below.

Panorama Viewer + Leaflet

I began creating this application for my firm after a client requested an online portal to host panoramas captured by our flight ops team for a special project. Our dev team in Malaysia had been building the portal but was taking too long, and the agreed completion deadline was fast approaching, so I took it upon myself to build the application on my own. It was simple, but everything needed to be very precise. I knew I had all the tools I needed; it was just a matter of how well I could focus. I used HTML, JS, CSS, the Leaflet JS API, and Marzipano to create the application. While my boss refused to allow it to be shown, I believe it was ultimately a better product than the one we delivered. It did what it needed to do, and its functionality and quality were apparent. I was proud of it. A note to those interested in viewing the application: I have made this GitHub repository private for client purposes. If you wish to see it, please reach out and request access; I can provide credentials.

Beta Applications

Raceday

Raceday, which is still under significant development, will be a utility that gives runners, cyclists, and trail-goers in-depth statistics on their activities using data processed from Garmin exports such as GPX and KML. It will be most useful as a post-race application, incorporating 3D elevation models and floating polylines that represent different athletes, so that athletes can better understand both the terrain and their competition.
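A minimal sketch of the kind of per-activity statistic Raceday is meant to compute, assuming a GPX 1.1 export whose `<trkpt>` elements carry `lat`/`lon` attributes and an optional `<ele>` child. The function names are hypothetical and this is not the Raceday code itself. Distance uses the haversine formula on a spherical Earth (mean radius 6,371 km), which is accurate enough for activity summaries but not survey-grade.

```python
import math
import xml.etree.ElementTree as ET

GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}  # GPX 1.1 namespace
EARTH_RADIUS_M = 6_371_000  # mean Earth radius (spherical assumption)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two WGS84 points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def activity_stats(gpx_text):
    """Return total distance (m) and cumulative elevation gain (m) over all track points."""
    root = ET.fromstring(gpx_text)
    points = []
    for trkpt in root.iterfind(".//gpx:trkpt", GPX_NS):
        ele = trkpt.find("gpx:ele", GPX_NS)
        points.append((float(trkpt.get("lat")), float(trkpt.get("lon")),
                       float(ele.text) if ele is not None else None))
    distance, gain = 0.0, 0.0
    # Walk consecutive point pairs, summing segment lengths and climbs only.
    for (lat1, lon1, e1), (lat2, lon2, e2) in zip(points, points[1:]):
        distance += haversine_m(lat1, lon1, lat2, lon2)
        if e1 is not None and e2 is not None and e2 > e1:
            gain += e2 - e1
    return {"distance_m": distance, "elevation_gain_m": gain}
```

Summing raw elevation differences tends to overstate gain because of GPS noise, so a production version would likely smooth elevations (or sample them from a DEM) before accumulating.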

ArcPy: Scripts created by me for an automated workflow

At work, we deal a lot with raster datasets. Below are some scripts I’ve written to automate what we do with them.

SplitRasterWorkflow.py

I created this script as a GIS Support Engineer at Mapware. It was meant to automate the entire GIS workflow that fed into, and preceded, the ML/AI model-building process. Because we were building an application to detect habitat, our ML team required both DEM and DSM orthos to be split into 1-acre tiles. This script automated that effort.
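The tiling arithmetic can be sketched in plain Python, assuming square tiles, a projected coordinate system with cell sizes in meters, and the international acre (4,046.8564224 m², so a side of roughly 63.6 m). The function name is hypothetical and the original script's exact approach is not shown here; because the side length is rounded to whole pixels, tiles are approximately (not exactly) 1 acre, and edge tiles are clipped to the raster.

```python
import math

ACRE_M2 = 4046.8564224  # 1 international acre = 43,560 sq ft, in square meters


def acre_tile_windows(n_cols, n_rows, cell_size_m):
    """Yield (col_off, row_off, width, height) pixel windows of ~1-acre square tiles.

    Edge tiles are clipped to the raster bounds and can be smaller than an acre.
    """
    side_px = max(1, round(math.sqrt(ACRE_M2) / cell_size_m))
    for row_off in range(0, n_rows, side_px):
        for col_off in range(0, n_cols, side_px):
            yield (col_off, row_off,
                   min(side_px, n_cols - col_off),
                   min(side_px, n_rows - row_off))


# Example: a 1000 x 700 pixel ortho at 10 cm resolution gives 636-pixel tiles.
windows = list(acre_tile_windows(1000, 700, 0.1))
```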

Dig_Permit.py

This Python script was written to automate the process of creating and exporting Dig Permit Maps for stakeholders at Joint Base Andrews. The tool uses ArcPy and dependencies such as arcpy.mapping, webbrowser, and others to produce and export a complete mapping deliverable in PDF format: a map of a specified extent containing all assets and utilities in that area. The script turned the manual effort of producing this deliverable into a process that runs with the click of a button.

2DBuildings2Mulipatch.py

I wrote this script before Christmas 2021. I was working with a 2D building footprint feature class and converted that feature class into a multipatch dataset, using both bare-earth elevation data and digital surface models to create the multipatch. Once that was done, I created a Python script to automate the process.

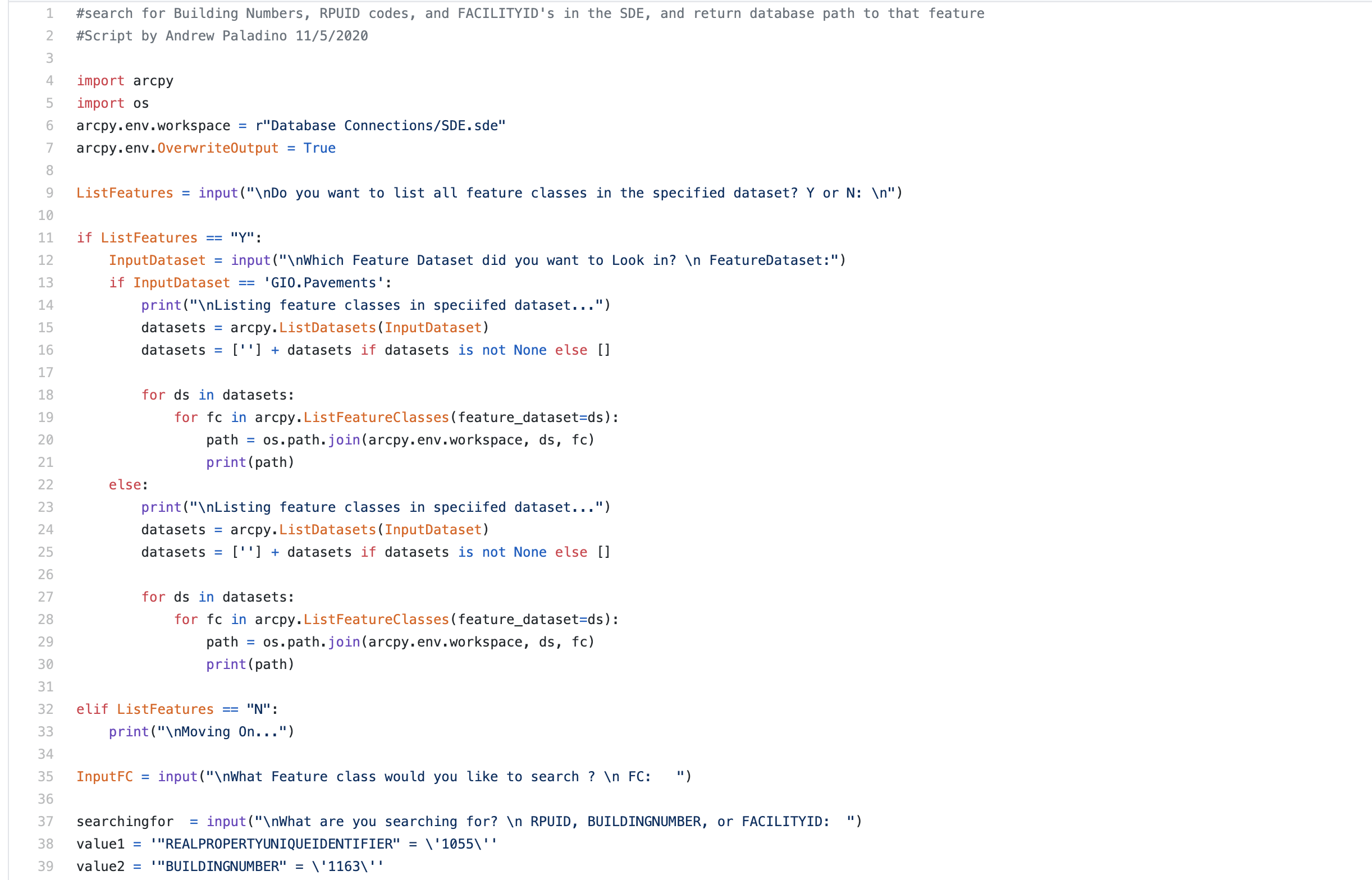
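The step that combines the two elevation sources can be sketched as follows: a building's height is estimated from the DSM minus the bare-earth DEM (a normalized DSM) over the cells inside its footprint. This is a hypothetical helper assuming the cell values have already been sampled for one footprint; the median is one reasonable choice (assumed here) because it resists edge cells that catch ground or trees. The actual extrusion into a multipatch is done by ArcGIS tools and is not shown.

```python
from statistics import median


def footprint_height(dsm_samples, dem_samples):
    """Estimate a building's height as the median of (DSM - bare-earth DEM)
    over the raster cells that fall inside its footprint."""
    if not dsm_samples or len(dsm_samples) != len(dem_samples):
        raise ValueError("need equal-length, non-empty sample lists")
    # Clamp negatives to zero: a surface below bare earth is treated as noise.
    ndsm = [max(0.0, s - g) for s, g in zip(dsm_samples, dem_samples)]
    return median(ndsm)
```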

SearchforRPRUID_Build.py

This is a Python script I created when I was working heavily in an ArcSDE environment as a Geospatial Analyst for Woolpert (Andrews Air Force Base). Because the enterprise geodatabase was so large, and contained so many feature classes spread across multiple feature datasets within one default GDB, I created a script that uses user input to find and filter specific feature classes in feature datasets, all the way down to the field level. The script uses arcpy.da.SearchCursor to search for specific fields with specific values based on user input. The script will be very helpful not only for database management but also for syncing with the onsite Real Property Office, which maintains records for every feature on base and sets values for those features.
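The search logic (dataset, then feature class, then field, then value) can be sketched without ArcPy by modeling the geodatabase as nested dictionaries. The structure, the `find_matches` name, and the sample values are hypothetical; in a real geodatabase the walk would come from ArcPy listing functions such as `arcpy.ListDatasets` and `arcpy.ListFeatureClasses`, with rows read through `arcpy.da.SearchCursor`.

```python
def find_matches(gdb, field_name, value):
    """Walk {feature_dataset: {feature_class: [row_dict, ...]}} and return
    (dataset, feature_class, row) for every row whose field equals value."""
    matches = []
    for dataset, feature_classes in gdb.items():
        for fc_name, rows in feature_classes.items():
            for row in rows:
                # Skip feature classes that don't carry the field at all.
                if field_name in row and row[field_name] == value:
                    matches.append((dataset, fc_name, row))
    return matches


# Hypothetical sample: two feature datasets, one feature class without the field.
sample_gdb = {
    "Utilities": {"WaterLine": [{"RPRUID": "1001", "Material": "PVC"}, {"RPRUID": "1002"}]},
    "Structures": {"Building": [{"RPRUID": "1002", "Name": "Hangar"}], "Fence": [{"Type": "Chain"}]},
}
hits = find_matches(sample_gdb, "RPRUID", "1002")
```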

IR_photo2point_merge.py

This script takes multiple folders of thermal images (captured by a drone), creates a geodatabase, runs the ArcPy tool “Geotagged Photos to Points” on the photos, merges the individual point layers into one feature class, and gives the user the option to delete the intermediate feature layers. The user is left with a geodatabase containing a single point feature class whose points represent all of the thermal photos taken during a drone survey. I use this script to annotate damage on solar farms.
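The two ideas behind the workflow, turning a photo's stored GPS position into a point and merging points from many folders into one collection, can be sketched in plain Python. This assumes the positions arrive as EXIF-style degrees/minutes/seconds with a hemisphere reference; the function names and the folder/photo layout are hypothetical, and in the real script ArcPy's “Geotagged Photos to Points” tool handles the reading.

```python
def dms_to_decimal(degrees, minutes, seconds, ref):
    """Convert degrees/minutes/seconds plus a hemisphere ref (N/S/E/W) to signed decimal degrees."""
    value = degrees + minutes / 60 + seconds / 3600
    return -value if ref in ("S", "W") else value


def merge_photo_points(folders):
    """Flatten {folder: [(photo, lat, lon), ...]} into one list of point records,
    keeping the source folder so each point can be traced back to its flight."""
    merged = []
    for folder, photos in folders.items():
        for photo, lat, lon in photos:
            merged.append({"folder": folder, "photo": photo, "lat": lat, "lon": lon})
    return merged
```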

panelLayer2GeoJSON.py

This script projects a polygon feature class into WGS84 and then converts the newly projected feature class into a .geojson file. This is useful for solar inspection portals hosted on the web.
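Projecting first matters because the GeoJSON specification (RFC 7946) expects WGS84 longitude/latitude coordinates, which is what web mapping libraries assume. The output side can be sketched with the standard `json` module, assuming each panel polygon is a single outer ring already in WGS84 as (lon, lat) pairs; the function name and the `panel_id` property are hypothetical.

```python
import json


def polygons_to_geojson(polygons):
    """Build a GeoJSON FeatureCollection from (properties, ring) pairs,
    where ring is a list of (lon, lat) tuples already in WGS84."""
    features = []
    for properties, ring in polygons:
        coords = [list(pt) for pt in ring]
        if coords[0] != coords[-1]:
            coords.append(coords[0])  # GeoJSON linear rings must close on their first point
        features.append({
            "type": "Feature",
            "properties": properties,
            "geometry": {"type": "Polygon", "coordinates": [coords]},
        })
    return {"type": "FeatureCollection", "features": features}


fc = polygons_to_geojson([({"panel_id": 1}, [(-77.0, 38.0), (-77.0, 38.001), (-76.999, 38.001)])])
geojson_text = json.dumps(fc)  # write this string to a .geojson file
```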

KMLLayerArea.py

This script uses the arcpy.KMLToLayer_conversion tool to convert a specified input KML/KMZ file into a specified output feature class. The script then uses arcpy.da.SearchCursor to calculate the area of the feature class in the units the user specifies (sq ft or acres). The script has been useful mainly for sales purposes. The unit prompt matters because we use square footage to price rooftop inspection surveys, and acres or MWs when bidding on solar proposals.
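KML coordinates are WGS84 longitude/latitude in degrees, so an area has to be computed in a projected (or geodesic) system before converting units. The sketch below assumes the polygon ring has already been projected to a meter-based coordinate system and illustrates the area and unit-conversion step with the shoelace formula; the helper names are hypothetical, and in ArcPy the geometry object returned by the cursor would normally supply the area.

```python
SQ_M_PER_UNIT = {
    "sq ft": 0.09290304,    # exact, since 1 ft = 0.3048 m
    "acres": 4046.8564224,  # exact, since 1 international acre = 43,560 sq ft
}


def shoelace_area(ring):
    """Planar area of a simple polygon ring [(x, y), ...] in squared ring units."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def area_in_units(ring_m, units):
    """Area of a ring in a meter-based projected CRS, returned in 'sq ft' or 'acres'."""
    if units not in SQ_M_PER_UNIT:
        raise ValueError(f"units must be one of {sorted(SQ_M_PER_UNIT)}")
    return shoelace_area(ring_m) / SQ_M_PER_UNIT[units]
```

A 100 m by 100 m rooftop (10,000 m²) works out to about 107,639 sq ft, or about 2.47 acres.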

ResampleRastersFME.py

This script takes two raster datasets output by an FME conversion and resamples them so they load faster in the ArcMap/ArcGIS Pro GUI. It is useful in our topography workflow when dealing with massive raster datasets such as an RGB ortho and a DSM.
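Why resampling speeds up display comes down to pixel counts: coarsening the cell size by a factor k reduces the number of pixels by k². A minimal block-averaging sketch on a 2D list illustrates the idea; the function name is hypothetical, and block averaging is an assumed choice that suits continuous surfaces like a DSM, whereas an RGB ortho is often resampled with nearest-neighbor or bilinear methods instead.

```python
def block_mean_downsample(grid, factor):
    """Downsample a 2D list of numbers by averaging non-overlapping factor x factor blocks.

    Trailing rows/columns that don't fill a whole block are dropped.
    """
    rows = len(grid) // factor
    cols = len(grid[0]) // factor if grid else 0
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [grid[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out
```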

Raster2MapboxTileset.py

Gives the user an automated way to convert a raster image into a Mapbox tileset without the annoying black background.
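The black background typically comes from NoData cells stored as zero, which render as black unless they are made transparent. One common fix, sketched here in plain Python on a 2D list of RGB tuples, is to add an alpha channel that is 0 wherever the pixel is black. The function name and the `threshold` parameter are hypothetical, and the original script's exact method is not shown.

```python
def black_to_transparent(pixels, threshold=0):
    """Convert rows of (r, g, b) pixels to (r, g, b, a), making black pixels transparent.

    A pixel is treated as background when all channels are <= threshold.
    """
    rgba = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            alpha = 0 if max(r, g, b) <= threshold else 255
            out_row.append((r, g, b, alpha))
        rgba.append(out_row)
    return rgba
```

A small nonzero threshold can catch near-black compression artifacts at image edges, at the risk of also hiding genuinely dark pixels such as shadows.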

ClipRaster.py

Gives the user an automated way to clip rasters on the back end without tying up the ArcMap or ArcGIS Pro GUI. This tool is handy when working with multiple orthomosaics.

ProjectRasters.py

Allows the user to project multiple raster orthomosaics at once, without waiting for the GUI to become unresponsive and then responsive again.
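At its core, clipping a raster to a rectangle means finding the pixel window that covers the bounding box. A minimal sketch for a north-up raster (no rotation), assuming its top-left corner and a square cell size are known; the function and parameter names are hypothetical, and a polygon clip would additionally mask cells outside the shape.

```python
import math


def bbox_to_window(origin_x, origin_y, cell_size, n_cols, n_rows, xmin, ymin, xmax, ymax):
    """Return (col_off, row_off, width, height) of the pixel window covering a bounding box
    on a north-up raster whose top-left corner is (origin_x, origin_y).

    The window is clamped to the raster extent.
    """
    col_start = max(0, math.floor((xmin - origin_x) / cell_size))
    col_end = min(n_cols, math.ceil((xmax - origin_x) / cell_size))
    # Rows count downward from the top edge, so y decreases as row increases.
    row_start = max(0, math.floor((origin_y - ymax) / cell_size))
    row_end = min(n_rows, math.ceil((origin_y - ymin) / cell_size))
    if col_end <= col_start or row_end <= row_start:
        raise ValueError("bounding box does not overlap the raster")
    return col_start, row_start, col_end - col_start, row_end - row_start
```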

Technical Interviews